Getting Started with JMeter: A Beginner's Guide to Performance Testing Distributed Systems

May 25, 2024

This article provides a comprehensive beginner's guide to using Apache JMeter for performance testing of distributed systems. It covers the history and significance of JMeter, outlines a sample distributed system architecture, and details step-by-step instructions for setting up and running JMeter tests. Key metrics such as response time, throughput, and resource utilization are explained, along with methods for identifying and resolving performance bottlenecks and saturated resources. Practical techniques for optimizing system performance are also discussed, making this guide an essential resource for developers and testers looking to ensure their applications can handle expected user loads efficiently.

Introduction to JMeter

Apache JMeter is an open-source tool designed for performance testing, load testing, and stress testing of web applications and other services. First developed by Stefano Mazzocchi of the Apache Software Foundation in 1998, JMeter has since evolved into a powerful, flexible tool used by developers and testers worldwide.

The "J" in JMeter reflects its Java roots: JMeter is written entirely in Java, and its first version was built to test the performance of Apache JServ, a Java servlet engine that was later replaced by Apache Tomcat. Over the years, it has grown to support a wide variety of protocols and application types, making it a versatile tool for performance testing.

JMeter is particularly useful because it can simulate a heavy load on a server, group of servers, network, or object to test its strength and analyze overall performance under different load types. It supports many protocols, such as HTTP, HTTPS, FTP, JDBC, LDAP, and JMS, so a single tool can exercise most tiers of a typical application.

Apache JMeter is an invaluable tool for performance engineers as it enables them to simulate real-world user behavior and measure the performance of web applications and services under various load conditions. By creating detailed test plans with multiple users, different types of requests, and realistic scenarios, JMeter helps identify performance bottlenecks, scalability issues, and capacity limits. Its extensive range of samplers, listeners, and assertions allows for thorough testing and analysis, ensuring that applications can handle expected and peak loads effectively. Ultimately, JMeter provides the insights needed to optimize performance, enhance user experience, and ensure system reliability.

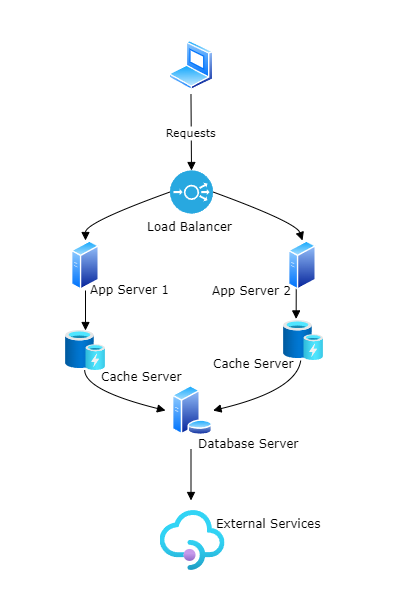

Sample Architecture for a Distributed System

Components:

- Client Layer: The user interface that interacts with the users.

- Load Balancer: Distributes incoming requests to multiple application servers to ensure no single server is overwhelmed.

- Application Servers: The servers that handle the business logic of the application.

- Database Server: The server where the data is stored and managed.

- Cache Server: A server that caches frequently accessed data to improve performance.

- External Services: Third-party services that the application may depend on (e.g., payment gateways, authentication services).


Setting Up JMeter to Test This Architecture

Now, let's walk through the steps to set up JMeter to test the performance of the above distributed system.

Step 1: Define the Test Plan

- Open JMeter:
  - Start JMeter by navigating to the `bin` directory and running `jmeter.bat` (Windows) or `./jmeter` (Mac/Linux).

- Create a New Test Plan:
  - Select File > New to start from a blank Test Plan.
  - Rename the Test Plan (e.g., "Distributed System Performance Test").

Step 2: Thread Group Configuration

- Add a Thread Group:
  - Right-click on the Test Plan > Add > Threads (Users) > Thread Group.
  - Rename the Thread Group (e.g., "User Simulation").

- Configure Thread Group:
  - Number of Threads (Users): Set the number of virtual users to simulate.
  - Ramp-Up Period (seconds): Time over which all the users are started. For example, 100 users with a ramp-up period of 100 seconds means 1 user will start every second.
  - Loop Count: Number of times each virtual user runs through the elements of the Thread Group. For continuous load, tick "Infinite" and bound the run with the thread lifetime (Duration) setting instead.
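The arithmetic behind these settings can be checked before a run. The sketch below assumes thread starts are spread evenly across the ramp-up period and that every sampler runs on every loop (no controllers skipping work); the function names are illustrative and not part of JMeter.

```python
def thread_start_offsets(num_threads: int, ramp_up_s: float) -> list[float]:
    """Approximate start offset (seconds) of each virtual user.

    Assumes starts are spread evenly: thread i starts at i * (ramp_up / num_threads).
    """
    if num_threads <= 0:
        raise ValueError("num_threads must be positive")
    interval = ramp_up_s / num_threads
    return [i * interval for i in range(num_threads)]


def total_samples(num_threads: int, loop_count: int, samplers_per_loop: int) -> int:
    """Sampler executions for a finite loop count when every sampler always runs."""
    return num_threads * loop_count * samplers_per_loop


offsets = thread_start_offsets(100, 100)
print(offsets[:3], offsets[-1])   # [0.0, 1.0, 2.0] 99.0
print(total_samples(100, 10, 3))  # 3000 (e.g. Login, Fetch Data, Submit Form)
```

With 100 users, a 100-second ramp-up, 10 loops, and three samplers, the last user starts roughly 99 seconds in and the run issues about 3,000 requests in total.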

Step 3: HTTP Request Samplers

- Add HTTP Request Samplers:
  - Right-click on the Thread Group > Add > Sampler > HTTP Request.
  - Configure each HTTP Request to simulate a different user action. Example actions: Login, Fetch Data, Submit Form.

- Configure HTTP Requests:
  - Login Request:
    - Name: "Login"
    - Server Name or IP: `www.example.com`
    - Path: `/login`
    - Method: `POST`
    - Parameters: Add necessary parameters (e.g., username, password).
  - Fetch Data Request:
    - Name: "Fetch Data"
    - Server Name or IP: `www.example.com`
    - Path: `/fetchData`
    - Method: `GET`
    - Parameters: Add necessary parameters (e.g., userId).
  - Submit Form Request:
    - Name: "Submit Form"
    - Server Name or IP: `www.example.com`
    - Path: `/submitForm`
    - Method: `POST`
    - Parameters: Add necessary parameters (e.g., form data).
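It can help to pin down exactly what each sampler should send before entering it in JMeter. The following sketch uses Python's standard library to build, without sending, requests shaped like the Login and Fetch Data samplers. The `https` scheme and the parameter values are assumptions for illustration; the encoding mirrors JMeter's default of putting GET parameters in the query string and sending POST parameters as a form-encoded body.

```python
from urllib.parse import urlencode
from urllib.request import Request

BASE_URL = "https://www.example.com"  # assumed scheme + the example host from the test plan


def build_request(method: str, path: str, params: dict[str, str]) -> Request:
    """Build (but do not send) a request shaped like a JMeter HTTP Request sampler."""
    encoded = urlencode(params)
    if method == "GET":
        # GET parameters travel in the query string
        url = f"{BASE_URL}{path}?{encoded}" if encoded else f"{BASE_URL}{path}"
        return Request(url, method="GET")
    # POST parameters travel as an application/x-www-form-urlencoded body
    req = Request(f"{BASE_URL}{path}", data=encoded.encode("utf-8"), method=method)
    req.add_header("Content-Type", "application/x-www-form-urlencoded")
    return req


login = build_request("POST", "/login", {"username": "alice", "password": "secret"})
fetch = build_request("GET", "/fetchData", {"userId": "42"})
print(login.get_method(), login.full_url, login.data)
print(fetch.get_method(), fetch.full_url)
```

Passing one of these objects to `urllib.request.urlopen` would send it, which is a quick way to confirm an endpoint responds as expected before scripting the load test.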

Step 4: Logic Controllers

Add Logic Controllers to Simulate Realistic User Behavior:

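- Loop Controller:
  - Right-click on the Thread Group > Add > Logic Controller > Loop Controller.
  - Set the loop count to simulate multiple actions per user, then move the requests you want to repeat under the controller (only its child elements are looped).
- If Controller:
  - Right-click on the Thread Group > Add > Logic Controller > If Controller.
  - Specify a condition that decides whether its child requests run. For example, you can simulate conditional form submissions based on data values.
  - With "Interpret Condition as Variable Expression?" checked, which is the recommended setting, write the condition with a function such as `__jexl3`, e.g., `${__jexl3("${JMeterVariable}" == "value")}`.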
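To make the execution order concrete, here is a small Python model of one iteration of a virtual user. It is not JMeter code: it assumes a hypothetical tree in which Login sits directly under the Thread Group, Fetch Data sits under a Loop Controller, and Submit Form sits under an If Controller that tests `JMeterVariable`.

```python
def run_iteration(variables: dict[str, str], loop_count: int) -> list[str]:
    """Return the sampler names executed in one Thread Group iteration (hypothetical tree)."""
    executed = ["Login"]  # sampler placed directly under the Thread Group

    # Loop Controller: repeats only its children, loop_count times
    for _ in range(loop_count):
        executed.append("Fetch Data")

    # If Controller: runs its children only when the condition is true
    if variables.get("JMeterVariable") == "value":
        executed.append("Submit Form")

    return executed


print(run_iteration({"JMeterVariable": "value"}, loop_count=2))
# ['Login', 'Fetch Data', 'Fetch Data', 'Submit Form']
print(run_iteration({"JMeterVariable": "other"}, loop_count=1))
# ['Login', 'Fetch Data']
```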

Step 5: Listeners

- Add Listeners to Collect Data:
  - View Results Tree:
    - Right-click on the Thread Group > Add > Listener > View Results Tree.
    - Use it to debug the test plan with a few users, and disable it during real load tests because it consumes a lot of memory and CPU.
  - Summary Report:
    - Right-click on the Thread Group > Add > Listener > Summary Report.
  - Aggregate Report:
    - Right-click on the Thread Group > Add > Listener > Aggregate Report.
  - Backend Listener:
    - Add a Backend Listener to stream metrics to a time-series database such as InfluxDB or Graphite (JMeter ships clients for both), which a dashboard tool like Grafana can visualize in real time.

Step 6: Assertions

- Add Assertions to Validate Responses:
  - Response Assertion:
    - Right-click on an HTTP Request > Add > Assertions > Response Assertion.
    - Configure it to check for expected response content or status codes.
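A Response Assertion compares a field of the response (for example, the text body or the response code) against a pattern using a matching rule. The sketch below approximates four of those rules (Contains, Matches, Equals, Substring) in Python. It is only an approximation: JMeter's regular-expression engine is not Python's `re`, so edge cases can differ, and the function name is hypothetical.

```python
import re


def response_assertion(text: str, pattern: str, rule: str = "Contains") -> bool:
    """Approximate JMeter Response Assertion pattern-matching rules."""
    if rule == "Contains":   # regex may match anywhere in the text
        return re.search(pattern, text) is not None
    if rule == "Matches":    # regex must match the whole text
        return re.fullmatch(pattern, text) is not None
    if rule == "Equals":     # exact, case-sensitive string comparison
        return text == pattern
    if rule == "Substring":  # plain, case-sensitive substring (no regex)
        return pattern in text
    raise ValueError(f"unsupported rule: {rule}")


body = '{"status": "ok", "userId": 42}'
print(response_assertion(body, r'"status":\s*"ok"'))        # True
print(response_assertion("200", r"2\d\d", rule="Matches"))  # True, e.g. a response code check
print(response_assertion("abc", "a.c", rule="Substring"))   # False: "." is literal here
```

The last line shows why the rule matters: "a.c" is a regex under Contains but plain text under Substring.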

Step 7: Configuration Elements

- Add Configuration Elements for Default Settings and Variables:
  - HTTP Request Defaults:
    - Right-click on the Thread Group > Add > Config Element > HTTP Request Defaults.
    - Set default values for server name, port, and protocol; samplers that leave these fields blank inherit them.
  - User Defined Variables:
    - Right-click on the Test Plan > Add > Config Element > User Defined Variables.
    - Define variables for reuse across requests (e.g., baseURL, common parameters) and reference them as `${baseURL}`.
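The effect of these two elements can be modeled in a few lines. The sketch below assumes that a blank sampler field inherits the HTTP Request Defaults value and that `${name}` references are replaced by variable values, with unknown names left untouched (which is also what JMeter does with undefined variables). Python's `string.Template` happens to use the same `${name}` syntax; all values are hypothetical.

```python
from string import Template

# Hypothetical values standing in for HTTP Request Defaults and User Defined Variables
HTTP_DEFAULTS = {"protocol": "https", "server": "www.example.com", "port": "443"}
USER_VARS = {"baseURL": "https://www.example.com", "apiVersion": "v1"}


def apply_defaults(sampler: dict[str, str], defaults: dict[str, str]) -> dict[str, str]:
    """Fill any blank sampler field from the defaults; non-blank fields win."""
    merged = dict(defaults)
    merged.update({key: value for key, value in sampler.items() if value})
    return merged


def substitute(text: str, variables: dict[str, str]) -> str:
    """Replace ${name} references; unknown names are left as-is."""
    return Template(text).safe_substitute(variables)


login = apply_defaults({"server": "", "path": "/login", "method": "POST"}, HTTP_DEFAULTS)
print(login["server"], login["path"])  # www.example.com /login
print(substitute("${baseURL}/${apiVersion}/fetchData?id=${userId}", USER_VARS))
# https://www.example.com/v1/fetchData?id=${userId}
```

A literal `${userId}` surviving in a request, as in the last line, is a common sign that a variable was never defined or is out of scope.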

Step 8: Timers

- Add Timers to Simulate Real-World Delays:
  - Constant Timer:
    - Right-click on the Thread Group > Add > Timer > Constant Timer.
    - Set a fixed delay between requests.
  - Uniform Random Timer:
    - Right-click on the Thread Group > Add > Timer > Uniform Random Timer.
    - Configure a random delay range to mimic varying user think times.
  - Timers placed directly under the Thread Group run before every sampler in it, and when several timers are in scope their delays add up.
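The Uniform Random Timer's delay is its Constant Delay Offset plus a random value between zero and its Random Delay Maximum. The sketch below models that, together with a Constant Timer in the same scope, using hypothetical millisecond values and a seeded random generator so the output is repeatable.

```python
import random


def uniform_random_timer(rng: random.Random, random_max_ms: float, offset_ms: float) -> float:
    """Constant Delay Offset plus a uniformly distributed value up to Random Delay Maximum."""
    return offset_ms + rng.uniform(0, random_max_ms)


def pause_before_sampler(rng: random.Random, constant_ms: float,
                         random_max_ms: float, offset_ms: float) -> float:
    """Total pause when a Constant Timer and a Uniform Random Timer are both in scope."""
    return constant_ms + uniform_random_timer(rng, random_max_ms, offset_ms)


rng = random.Random(7)  # fixed seed so the sketch is reproducible
pauses = [pause_before_sampler(rng, 500, 2000, 1000) for _ in range(5)]
print([round(p) for p in pauses])  # every value lies between 1500 and 3500 ms
```

Because the delays add up, stacking timers quietly lowers the request rate each virtual user generates, which is worth remembering when comparing runs.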

Running the Test

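- Non-GUI Mode: For large-scale testing, use non-GUI mode to save resources:
  - `jmeter -n -t testplan.jmx -l results.jtl`
  - `-n` runs JMeter without the GUI, `-t` specifies the test plan, and `-l` specifies the file the results are written to.

- Distributed Testing:
  - Set up JMeter in a Distributed Environment:
    - Start JMeter servers (often called "slaves" in older material) on different machines by running `jmeter-server` (`jmeter-server.bat` on Windows).
    - Configure the `remote_hosts` property in the `jmeter.properties` file on the master machine to list the IP addresses of the server machines.
    - Run the test from the master machine using `jmeter -n -t testplan.jmx -r`, adding `-l results.jtl` to save the results. The `-r` flag starts the test on every server listed in `remote_hosts`.
    - Each server runs the complete Thread Group, so 100 threads on three servers generate 300 virtual users.
    - Since JMeter 4.0, RMI traffic between the master and the servers uses SSL by default: create a keystore with the `create-rmi-keystore` script in the `bin` directory, or set `server.rmi.ssl.disable=true` on every machine.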
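If you launch tests from a script or CI job, it can help to build the command line programmatically. The sketch below uses only the `-n`, `-t`, `-l`, `-r`, and `-R` options (`-R` takes a comma-separated host list and overrides `remote_hosts`); the host addresses are hypothetical, and the command is only printed, not executed.

```python
import shlex
from typing import Sequence


def jmeter_command(plan: str, results: str, remote_hosts: Sequence[str] = (),
                   use_remote_hosts_property: bool = False) -> list[str]:
    """Build a non-GUI JMeter command line."""
    cmd = ["jmeter", "-n", "-t", plan, "-l", results]
    if remote_hosts:
        # -R starts the listed servers and overrides remote_hosts in jmeter.properties
        cmd += ["-R", ",".join(remote_hosts)]
    elif use_remote_hosts_property:
        # -r starts every server listed in remote_hosts
        cmd.append("-r")
    return cmd


def effective_users(threads_per_server: int, server_count: int) -> int:
    """Each remote server runs the whole Thread Group."""
    return threads_per_server * server_count


hosts = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]  # hypothetical server addresses
cmd = jmeter_command("testplan.jmx", "results.jtl", remote_hosts=hosts)
print(shlex.join(cmd))
print(effective_users(100, len(hosts)))  # 300
# To actually launch it (JMeter must be on PATH): subprocess.run(cmd, check=True)
```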

Conducting a Load Analysis

After running the test, the next crucial step is to analyze the results to understand the performance and identify potential bottlenecks.

Step 1: Collect Data

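- Open Results:
  - Open the results file (`results.jtl`) by loading it into a listener in the JMeter GUI (listeners provide a "Browse..." button next to their file name field), or import it into your preferred analysis tool.
  - For a ready-made HTML dashboard, run `jmeter -g results.jtl -o report_folder`; the output folder must be empty or not yet exist.

- Use Listeners:
  - View results using Listeners like View Results Tree, Summary Report, Aggregate Report, etc.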
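If you prefer your own analysis over the listeners, a CSV results file is easy to parse. The sketch below assumes the results were saved in CSV format with a header row and reads only the standard `label`, `elapsed`, and `success` columns; the sample rows are invented.

```python
import csv
import io
from collections import defaultdict

# A tiny hypothetical excerpt of a CSV results file; real files have more columns.
SAMPLE_JTL = """timeStamp,elapsed,label,responseCode,success
1716600000000,120,Login,200,true
1716600000500,340,Fetch Data,200,true
1716600001000,95,Login,200,true
1716600001200,1500,Fetch Data,503,false
"""


def summarize(jtl_file) -> dict[str, dict[str, float]]:
    """Per-label sample count, average elapsed time (ms) and error percentage."""
    elapsed = defaultdict(list)
    errors = defaultdict(int)
    for row in csv.DictReader(jtl_file):
        elapsed[row["label"]].append(int(row["elapsed"]))
        if row["success"] != "true":
            errors[row["label"]] += 1
    return {
        label: {
            "samples": len(times),
            "avg_ms": sum(times) / len(times),
            "error_pct": 100.0 * errors[label] / len(times),
        }
        for label, times in elapsed.items()
    }


print(summarize(io.StringIO(SAMPLE_JTL)))
# With a real file: with open("results.jtl", newline="") as f: print(summarize(f))
```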

Step 2: Analyze Key Metrics

- Response Time:
  - Measure the time taken for requests to be processed.
  - Average Response Time: The average time taken for all requests.
  - 95th Percentile Response Time: Time below which 95% of the requests were completed, which helps identify the tail latency.

- Throughput:
  - Number of requests processed per unit time.
  - Higher throughput, at an acceptable response time and error rate, indicates the system can handle a larger load.

- Error Rate:
  - Percentage of failed requests.
  - Analyze the reasons for errors (e.g., server overload, configuration issues).

- Server Resource Utilization:
  - Monitor CPU, memory, and network usage on the servers.
  - High utilization may indicate the need for resource optimization or scaling.

Understanding Key Metrics

Response Time

Response time is a measure of how long it takes for a server to process a request and return a response to the client. It is a critical metric for understanding user experience, as slow response times can lead to user dissatisfaction.

- Average Response Time: The mean time taken for all requests during the test period.

- 95th Percentile Response Time: The response time below which 95% of the requests fall. This helps identify the tail latency and can highlight performance issues affecting a smaller subset of users.
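A quick calculation shows why the 95th percentile is reported alongside the average. The sketch uses the nearest-rank definition of a percentile; JMeter's listeners and other tools may interpolate differently, so expect small differences on real data. The sample values are made up.

```python
import math


def percentile_nearest_rank(values: list[float], pct: float) -> float:
    """Smallest sample such that at least pct% of the samples are <= it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


# 20 hypothetical response times (ms): most are fast, two are very slow.
samples = [100] * 18 + [2000, 3000]
print(sum(samples) / len(samples))           # 340.0
print(percentile_nearest_rank(samples, 50))  # 100
print(percentile_nearest_rank(samples, 95))  # 2000
```

The 340 ms average matches no request anyone actually experienced, while the 95th percentile exposes the 2-second tail.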

Throughput

Throughput is the number of requests processed by the server per unit time, typically measured in requests per second (RPS). It indicates the server's ability to handle load and is essential for understanding the capacity of the system.

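- Higher sustained throughput means the system can process more requests per second. Throughput is a rate, not the number of requests in flight at the same moment, and it is only meaningful alongside acceptable response times and a low error rate.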
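Two calculations are useful here. JMeter defines throughput as the number of samples divided by the time from the start of the first sample to the end of the last, which the first function reproduces from (start, elapsed) pairs. The second applies the interactive response time law from queueing theory, X = N / (R + Z), to estimate the throughput that N users with response time R and think time Z should produce; it is a standard modeling result, not a JMeter feature. All numbers are hypothetical.

```python
def jmeter_throughput(samples: list[tuple[int, int]]) -> float:
    """Requests/second from (start_ms, elapsed_ms) pairs.

    Time runs from the start of the first sample to the end of the last sample.
    """
    first_start = min(start for start, _ in samples)
    last_end = max(start + elapsed for start, elapsed in samples)
    return len(samples) / ((last_end - first_start) / 1000)


def expected_throughput(users: int, response_s: float, think_s: float) -> float:
    """Interactive response time law for a closed workload: X = N / (R + Z)."""
    return users / (response_s + think_s)


# Four hypothetical samples spanning 2 seconds -> 2.0 requests/second
print(jmeter_throughput([(0, 200), (500, 300), (1000, 250), (1800, 200)]))
# 200 users, 0.5 s response time, 1.5 s think time -> 100.0 requests/second
print(expected_throughput(200, 0.5, 1.5))
```

If measured throughput falls well short of the estimate while response times climb, some resource is likely saturating.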

Utilization

Utilization refers to the percentage of total capacity being used by the server resources (CPU, memory, network). Monitoring utilization helps identify if the servers are overworked or underutilized.

- High utilization may suggest that the servers are close to their maximum capacity, which can lead to performance degradation.

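- Low utilization means capacity is going unused. If response times are also poor while utilization stays low, the bottleneck probably lies elsewhere, for example in lock contention, an undersized thread or connection pool, or a slow downstream dependency.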
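The utilization law from queueing theory ties this metric to throughput: U = X × D / m, where X is throughput, D is the resource time (service demand) each request consumes, and m is the number of identical servers or cores. The sketch below applies it with made-up numbers; measuring D for a real system (for example, total CPU time divided by completed requests) requires data JMeter does not collect by itself.

```python
def utilization(throughput_rps: float, service_demand_ms: float, servers: int = 1) -> float:
    """Utilization law: U = X * D / m, returned as a fraction (1.0 = 100%)."""
    return throughput_rps * service_demand_ms / 1000 / servers


def max_throughput(service_demand_ms: float, servers: int = 1) -> float:
    """Requests/second at which the resource reaches 100% utilization (upper bound)."""
    return servers * 1000 / service_demand_ms


# Hypothetical: each request needs 8 ms of CPU on a 4-core application server.
print(utilization(300, 8, servers=4))  # 0.6 -> 60% CPU at 300 requests/second
print(max_throughput(8, servers=4))    # 500.0 requests/second at most
```

In practice response times start rising sharply well before U reaches 1.0, so the upper bound is a ceiling, not a target.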

Collecting Metrics with JMeter

Response Time

Response time is the duration taken to complete a request. It is a key performance indicator that shows how long a user must wait for a response.

- View Results Tree Listener:
  - Add a View Results Tree listener to your Thread Group.
  - This listener provides detailed information about each request, including the response time for each request.
  - Steps:
    - Right-click on the Thread Group > Add > Listener > View Results Tree.
    - Run your test.
    - After the test, view the response time for each request in the View Results Tree.

- Summary Report:
  - A Summary Report listener aggregates data and provides metrics like average, minimum, and maximum response times.
  - Steps:
    - Right-click on the Thread Group > Add > Listener > Summary Report.
    - Run your test.
    - View the report to see average, minimum, and maximum response times.

- Aggregate Report:
  - An Aggregate Report listener provides similar metrics and adds the median and the 90th, 95th, and 99th percentile response times (the "90% Line", "95% Line", and "99% Line" columns).
  - Steps:
    - Right-click on the Thread Group > Add > Listener > Aggregate Report.
    - Run your test.
    - View the report to see response time statistics.

Throughput

Throughput measures the number of requests processed by the server per unit time, usually requests per second.

- Summary Report:
  - The Summary Report listener also provides throughput information, showing how many requests were processed per second.
  - Steps:
    - Right-click on the Thread Group > Add > Listener > Summary Report.
    - Run your test.
    - View the Throughput column in the report.

- Aggregate Report:
  - The Aggregate Report listener also provides throughput metrics.
  - Steps:
    - Right-click on the Thread Group > Add > Listener > Aggregate Report.
    - Run your test.
    - View the Throughput column in the report.

- Backend Listener:
  - A Backend Listener can stream metrics to a time-series database such as InfluxDB or Graphite, from which a dashboard tool like Grafana can display throughput over time.
  - Steps:
    - Right-click on the Thread Group > Add > Listener > Backend Listener.
    - Select a Backend Listener implementation (JMeter includes Graphite and InfluxDB clients) and set its connection parameters, such as the database URL.
    - View throughput metrics in real time on your monitoring dashboard.
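Dashboards show throughput as a time series rather than a single number. A rough equivalent can be computed from sample timestamps, such as the `timeStamp` column of a results file, by counting samples per fixed interval. The one-second interval and the timestamps below are assumptions for illustration.

```python
from collections import Counter


def throughput_per_interval(timestamps_ms: list[int], interval_s: int = 1) -> list[tuple[int, int]]:
    """Count samples per interval as (interval start in s, count) pairs in time order.

    Intervals with no samples are reported with a count of 0 so gaps stay visible.
    """
    if not timestamps_ms:
        return []
    width_ms = interval_s * 1000
    start = min(timestamps_ms)
    buckets = Counter((ts - start) // width_ms for ts in timestamps_ms)
    last = max(buckets)
    return [(b * interval_s, buckets.get(b, 0)) for b in range(last + 1)]


# Hypothetical sample timestamps in ms, relative to the start of the test
ts = [0, 150, 900, 1100, 1200, 3050]
print(throughput_per_interval(ts))  # [(0, 3), (1, 2), (2, 0), (3, 1)]
```

A zero-count interval like second 2 above is worth investigating: it often lines up with a garbage-collection pause, a failover, or a saturated dependency.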

Utilization

Utilization refers to the usage levels of various resources such as CPU, memory, and network. JMeter does not directly monitor these server-side metrics, but it can be integrated with other tools to gather this information.

- JMeter Plugins:
  - Use the JMeter Plugins Manager to install plugins that can monitor server-side metrics.
  - Steps:
    - Install the JMeter Plugins Manager by placing its JAR file in JMeter's `lib/ext` directory and restarting JMeter.
    - Install plugins like PerfMon Metrics Collector.
    - Start the PerfMon Server Agent on each application server (it listens on port 4444 by default), then configure the PerfMon Metrics Collector to connect to it.

- PerfMon Metrics Collector:
  - This plugin collects server-side metrics like CPU, memory, and network usage.
  - Steps:
    - Right-click on the Thread Group > Add > Listener > jp@gc - PerfMon Metrics Collector.
    - Configure the PerfMon Metrics Collector with the server details and the metrics you want to collect.
    - Run your test and view the collected server metrics in real time or after the test.

- External Monitoring Tools:
  - Integrate JMeter with external monitoring tools like Grafana, Prometheus, or Nagios.
  - Steps:
    - Set up an external monitoring tool on your servers.
    - Use JMeter's Backend Listener to send JMeter metrics to a time-series store the dashboard can read (Graphite and InfluxDB are supported out of the box; Prometheus requires a third-party plugin).
    - Correlate JMeter metrics with server metrics in the external monitoring tool's dashboard.

Example: Collecting Metrics in a Distributed System Test

Let's use an example to illustrate how to collect and analyze these metrics in a distributed system test.

Setup

- Thread Group:
  - Simulate 200 users with a ramp-up period of 200 seconds.
  - Include HTTP Request Samplers for Login, Fetch Data, and Submit Form actions.

- Listeners:
  - Add View Results Tree, Summary Report, Aggregate Report, and PerfMon Metrics Collector listeners.

- Configuration Elements:
  - Use CSV Data Set Config for parameterization.
  - Set default values using HTTP Request Defaults.

- Timers:
  - Add Constant Timer and Uniform Random Timer to simulate real-world delays.
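CSV Data Set Config reads lines from a CSV file and exposes their columns as JMeter variables, so each virtual user can log in with different credentials. A data file can be generated with a short script; the file name `users.csv`, the column names, and the credentials below are hypothetical. If the CSV Data Set Config's Variable Names field is left empty, JMeter takes the names from the first line, so the Login sampler could then reference `${username}` and `${password}`.

```python
import csv


def write_users_csv(path: str, count: int) -> None:
    """Write hypothetical test accounts, one per line, for CSV Data Set Config."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["username", "password"])  # header row -> variable names
        for i in range(1, count + 1):
            writer.writerow([f"user{i:03d}", f"Passw0rd!{i:03d}"])


write_users_csv("users.csv", 200)  # one account per simulated user
```

Generating one account per virtual user avoids many threads sharing a single login, which can hide or create contention that real traffic would not have.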

Running the Test

- Start JMeter:
  - Run the test plan in non-GUI mode for better performance: `jmeter -n -t testplan.jmx -l results.jtl`

- Monitor Real-Time Metrics:
  - Use the Backend Listener to send metrics to InfluxDB or Graphite and visualize them in real time with Grafana.

- Collect Server Metrics:
  - Ensure the PerfMon server is running on the application servers to collect utilization metrics.
  - View CPU, memory, and network utilization using the PerfMon Metrics Collector.

Analyzing the Results

- Response Time:
  - Open the Summary Report and Aggregate Report to analyze response times.
  - Check the 95% Line column of the Aggregate Report to identify any tail latency issues (the Summary Report does not show percentiles).

- Throughput:
  - Review the throughput metrics in the Summary Report and Aggregate Report.
  - Monitor the throughput graph in Grafana to observe trends over time.

- Utilization:
  - Analyze server utilization metrics collected by the PerfMon Metrics Collector.
  - Correlate high response times or low throughput with periods of high CPU or memory usage.

Example Analysis

- Response Time Analysis:
  - Identify any spikes in response time during peak load periods.
  - Determine if high response times correlate with high CPU or memory usage.

- Throughput Analysis:
  - Ensure that throughput remains stable as the number of users increases.
  - Identify any drop in throughput and correlate it with server utilization metrics.

- Utilization Analysis:
  - Monitor CPU, memory, and network utilization to identify any resource bottlenecks.
  - Determine if additional resources are needed or if optimizations can be made to the existing infrastructure.

Identifying Bottlenecks and Saturated Resources

Saturated Resources

Saturated resources occur when a system component (CPU, memory, or network) is operating at or near its maximum capacity. This condition can severely impact the performance and responsiveness of the application.

Bottlenecks

A bottleneck is a point in the system where the flow of data is limited or slowed down, causing performance issues. Identifying bottlenecks is crucial for optimizing system performance.

How to Measure and Identify with JMeter

- Resource Monitoring:
  - Use tools like JMeter Plugins, Grafana, or other monitoring tools to track CPU, memory, and network utilization.
  - Monitor these metrics during the load test to identify when resources become saturated.

- Analyze Response Times:
  - Identify requests with high response times using JMeter's listeners.
  - Correlate high response times with periods of high resource utilization to pinpoint potential bottlenecks.

- Throughput Analysis:
  - Track throughput to see if it drops when resource utilization is high.
  - A plateau or drop in throughput while the number of users and resource usage keep rising indicates that the system is struggling to handle the load.
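One way to make "throughput stops keeping up" precise is to run a stepped test (several runs with increasing thread counts) and compare how throughput scales with users between steps. The heuristic below is illustrative, not a JMeter feature: it flags the first step where throughput grew less than 90% as fast as the user count. Both the threshold and the data are assumptions.

```python
from typing import Optional


def scaling_efficiency(prev: tuple[int, float], curr: tuple[int, float]) -> float:
    """1.0 means throughput grew in proportion to users; lower means diminishing returns."""
    (users_a, tput_a), (users_b, tput_b) = prev, curr
    return (tput_b / tput_a) / (users_b / users_a)


def find_saturation_point(steps: list[tuple[int, float]], threshold: float = 0.9) -> Optional[int]:
    """Return the first user count whose step scaled worse than `threshold`, if any."""
    for prev, curr in zip(steps, steps[1:]):
        if scaling_efficiency(prev, curr) < threshold:
            return curr[0]
    return None


# Hypothetical (users, requests/second) results from a stepped load test
steps = [(50, 98.0), (100, 195.0), (150, 290.0), (200, 310.0), (250, 305.0)]
print(find_saturation_point(steps))  # 200
```

The user count it returns is the load level at which to look closely at the utilization graphs for the saturated resource.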

Techniques to Solve Saturated Resources and Bottlenecks

Optimize Code and Queries

- Optimize Application Code:
  - Refactor inefficient code.
  - Use efficient algorithms and data structures.

- Optimize Database Queries:
  - Use indexing to speed up query execution.
  - Optimize SQL queries to reduce load on the database.
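The effect of an index is easiest to see in a query plan. This self-contained sketch uses SQLite from Python's standard library rather than a production database, and the `orders` table and index name are hypothetical; other databases have their own `EXPLAIN` output, and the exact wording of SQLite's plan text varies between versions.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str) -> str:
    """Return SQLite's EXPLAIN QUERY PLAN detail text for a query."""
    rows = conn.execute(f"EXPLAIN QUERY PLAN {sql}").fetchall()
    return " | ".join(row[3] for row in rows)  # column 3 holds the readable detail


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (user_id, total) VALUES (?, ?)",
    [(i % 1000, i * 1.5) for i in range(10_000)],
)

query = "SELECT total FROM orders WHERE user_id = 42"
before = query_plan(conn, query)  # full table scan
conn.execute("CREATE INDEX idx_orders_user_id ON orders (user_id)")
after = query_plan(conn, query)   # lookup through the new index
print(before)
print(after)
```

Checking the plan of the queries behind your slowest JMeter samplers is a cheap first step before adding hardware.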

Scaling

- Vertical Scaling:
  - Increase the resources (CPU, memory) of existing servers to handle more load.

- Horizontal Scaling:
  - Add more servers to distribute the load.
  - Use load balancers to evenly distribute requests across multiple servers.

Caching

- Implement Caching:
  - Use caching mechanisms to store frequently accessed data.
  - Reduce the load on the database by serving cached data.

- Optimize Cache Usage:
  - Ensure that the cache is properly invalidated and refreshed to maintain data consistency.
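A common way to combine caching with invalidation is the cache-aside pattern: read from the cache, fall back to the database on a miss, and drop the entry whenever the underlying data changes. The sketch below is an in-process toy with a time-to-live (TTL); a distributed system would normally use a dedicated cache server like the one in the sample architecture, and the key format and loader function are hypothetical.

```python
import time
from typing import Callable, Dict, Tuple


class TTLCache:
    """Minimal cache-aside helper: entries expire after ttl_s seconds or on invalidation."""

    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock
        self._entries: Dict[str, Tuple[float, object]] = {}  # key -> (expires_at, value)

    def get_or_load(self, key: str, loader: Callable[[str], object]) -> object:
        now = self.clock()
        hit = self._entries.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]          # fresh cached value: no database call
        value = loader(key)        # miss or expired: go to the database
        self._entries[key] = (now + self.ttl_s, value)
        return value

    def invalidate(self, key: str) -> None:
        """Call after writing to the database so readers do not see stale data."""
        self._entries.pop(key, None)


db_calls = []


def load_user(key: str) -> str:  # hypothetical stand-in for a database query
    db_calls.append(key)
    return f"profile-for-{key}"


fake_now = [0.0]  # controllable clock for the demonstration
cache = TTLCache(ttl_s=30, clock=lambda: fake_now[0])
cache.get_or_load("user:42", load_user)  # miss -> database
cache.get_or_load("user:42", load_user)  # hit
cache.invalidate("user:42")              # e.g. after a Submit Form write
cache.get_or_load("user:42", load_user)  # miss again -> database
fake_now[0] = 31.0
cache.get_or_load("user:42", load_user)  # expired -> database
print(len(db_calls))  # 3
```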

Load Balancing

- Use Load Balancers:
  - Distribute incoming requests evenly across multiple servers.
  - Prevent any single server from becoming a bottleneck.

- Configure Load Balancer Settings:
  - Fine-tune load balancer settings for optimal performance.
  - Use techniques like round-robin, least connections, or IP hash for load distribution.
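The difference between two of these strategies shows up when requests have uneven durations. The toy model below is not how a real load balancer is configured; it only illustrates the selection rules, with hypothetical server names. Round-robin rotates regardless of load, while least connections looks at how many requests each server is currently handling.

```python
import itertools
from typing import Dict, List


class RoundRobin:
    """Hands requests to servers in a fixed rotation."""

    def __init__(self, servers: List[str]):
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnections:
    """Sends each request to the server with the fewest active connections."""

    def __init__(self, servers: List[str]):
        self.active: Dict[str, int] = {s: 0 for s in servers}

    def pick(self) -> str:
        server = min(self.active, key=self.active.get)  # ties go to the first server listed
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        self.active[server] -= 1


servers = ["app1", "app2", "app3"]  # hypothetical application servers
rr = RoundRobin(servers)
print([rr.pick() for _ in range(4)])  # ['app1', 'app2', 'app3', 'app1']

lc = LeastConnections(servers)
for _ in range(3):
    lc.pick()           # one request on each server
lc.release("app2")      # app2 and app3 finish quickly,
lc.release("app3")      # while app1 is still busy with a slow request
print(lc.pick())        # app2 (round-robin would have chosen the busy app1)
```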

Performance Tuning

- Server Configuration:
  - Optimize server configurations for better performance.
  - Adjust parameters like thread pools, connection timeouts, and memory settings.

- Network Optimization:
  - Optimize network configurations to reduce latency.
  - Use content delivery networks (CDNs) to serve content closer to users.

Conclusion

By following this detailed guide, you can set up JMeter to analyze the performance of a distributed system effectively. Understanding key metrics like response time, throughput, and utilization helps identify bottlenecks and saturated resources. Implementing optimization techniques ensures that your system can handle expected user load efficiently.

For further learning, refer to the official JMeter documentation and join communities and forums to stay updated with best practices and new features. Happy testing!